Tangible Lights

What am I looking at?

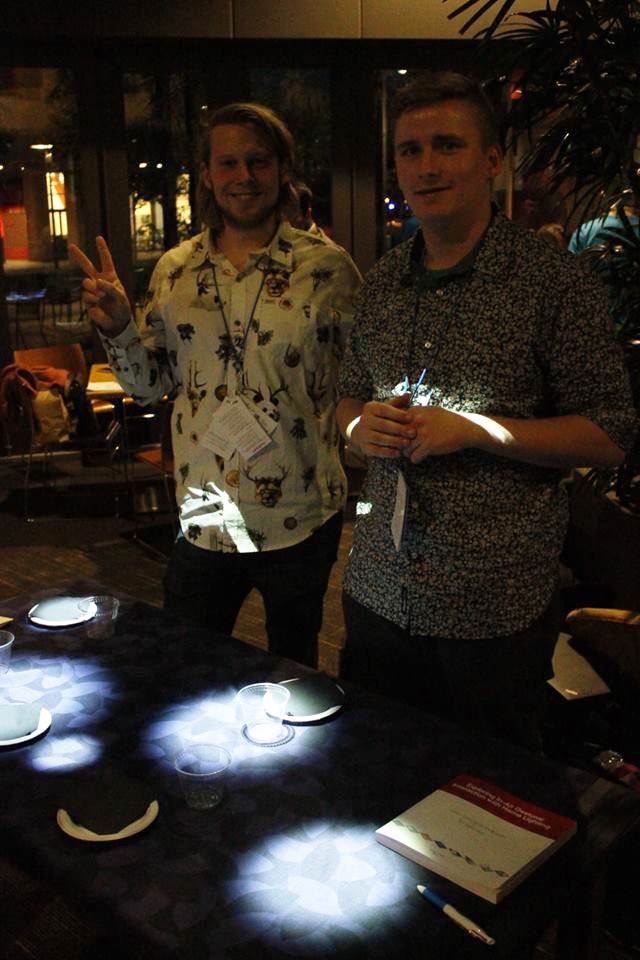

Tangible Lights is a prototype of a concept for customising a light setting with precise control over several individual illuminated regions. Each region can be manipulated freely in the space above the tabletop through a set of interconnected in-air gestures, designed with inspiration from how we manipulate physical objects.

What is the context?

The prototype implements our vision of everyday lighting that is controlled through meaningful in-air gestures. The system was presented and demonstrated at TEI 2015, the conference on Tangible, Embedded and Embodied Interaction. Tangible Lights was originally one of eight prototypes I developed for my Master's thesis at Aarhus University, which explored gestural interaction with light.

I'm into tech. How does the prototype work?

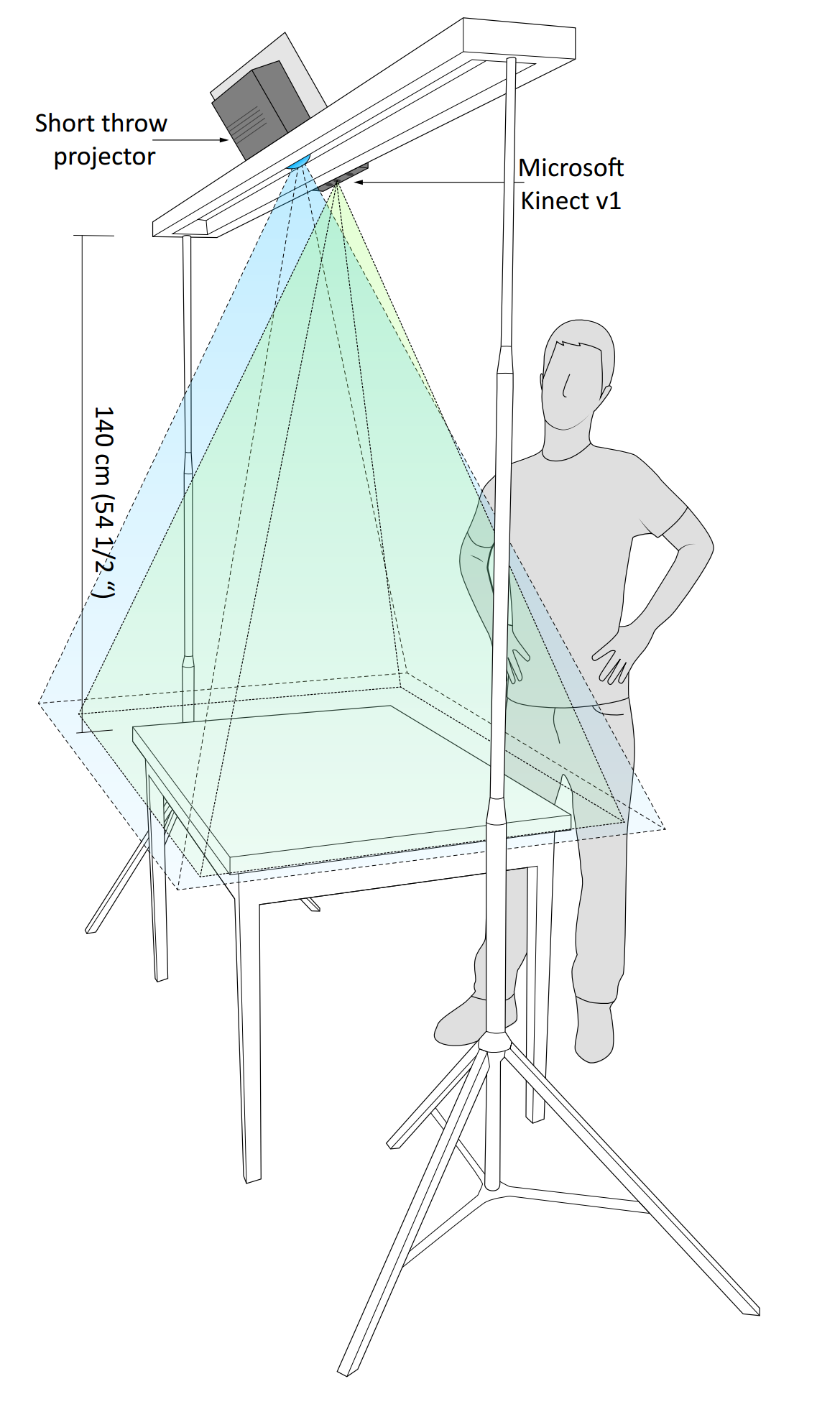

Technically, the platform consists of a short-throw projector and a Microsoft Kinect sensor, both mounted above the table. The projector serves as the light source because it offers an easy and dynamic way to position an arbitrary number of illuminated regions on the tabletop. The Kinect sensor continuously streams depth maps to the gesture recognition software at 30 frames per second. Our tracking software is written in C# using OpenCV and is based on the KinectArms project.
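To illustrate the kind of processing a downward-facing depth sensor makes possible, here is a minimal sketch of one common first step: separating whatever is held above the table (hands, arms) from the table surface in a single depth frame, then locating it by its centroid. This is not the KinectArms or Tangible Lights code, and it is written in Python rather than C#. It assumes a sensor pointing straight down, depth values in millimetres with 0 marking a missing reading, a known distance to the table, and illustrative height thresholds. The function names `segment_above_table` and `centroid` are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical representation: one depth frame as rows of millimetre values,
# where 0 marks a pixel without a valid depth reading (an assumption).
DepthMap = List[List[int]]
Mask = List[List[bool]]


def segment_above_table(depth: DepthMap, table_depth_mm: int,
                        min_height_mm: int = 30,
                        max_height_mm: int = 400) -> Mask:
    """Mark pixels lying between min_height_mm and max_height_mm above the table.

    Assumes the sensor looks straight down, so a point closer to the sensor
    than the table surface is higher above the table.
    """
    mask: Mask = []
    for row in depth:
        mask_row = []
        for d in row:
            if d == 0:
                # No reading for this pixel: treat it as background.
                mask_row.append(False)
                continue
            height = table_depth_mm - d
            # Ignore the table itself (and sensor noise near it) as well as
            # anything too high to be a hand over the table.
            mask_row.append(min_height_mm <= height <= max_height_mm)
        mask.append(mask_row)
    return mask


def centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Return the mean (x, y) pixel position of the masked pixels, or None."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, inside in enumerate(row):
            if inside:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    # A tiny synthetic frame: table at 1000 mm, a "hand" roughly 100 mm above it.
    frame = [
        [1000, 1000, 1000, 1000],
        [1000,  900,  880, 1000],
        [1000,  910,    0, 1000],
    ]
    mask = segment_above_table(frame, table_depth_mm=1000)
    print(centroid(mask))
```

In the synthetic frame, the three pixels at 880 to 910 mm count as "above the table", the missing reading and the table pixels do not, and the centroid lands at about (1.33, 1.33). At 30 frames per second, each frame must be processed in roughly 33 ms, so a real implementation would likely use vectorised OpenCV operations rather than per-pixel Python loops.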